Demystifying the Science of Making High Quality Assessment Questions

By Aditi Nangia

Nov 28, 2021

The National Education Policy (NEP) 2020 lays down an important goal: improve learning outcomes; shift from rote-based learning to conceptual understanding and application of knowledge. It advocates for competency-based assessments at all levels to achieve that goal by way of assessments at key stages of grades 3, 5 and 8, and reforms in board examinations.

The quality of questions (called items) is a key factor that determines the quality of assessments. A good question challenges and stimulates a child to think deeply and to apply concepts. The data generated from such high-quality assessments can help a teacher understand where students stand vis-a-vis learning outcomes and what their thought process is like.

Teachers involved in creating questions highlight two things as most critical in designing high-quality assessments: i) questions that test sharply defined competencies and learning objectives; and ii) high-quality distractors (incorrect answers that students might choose as the correct answer) that identify misconceptions in students’ understanding.

CSF interviewed Nishchal Shukla, Vice President (Content Development and Educational Research) at Educational Initiatives, to better understand the features of high-quality Multiple Choice Questions (MCQs), how to build teacher capacity for making thought-provoking MCQs for assessments in India, and what this entails institutionally.

What are MCQs?

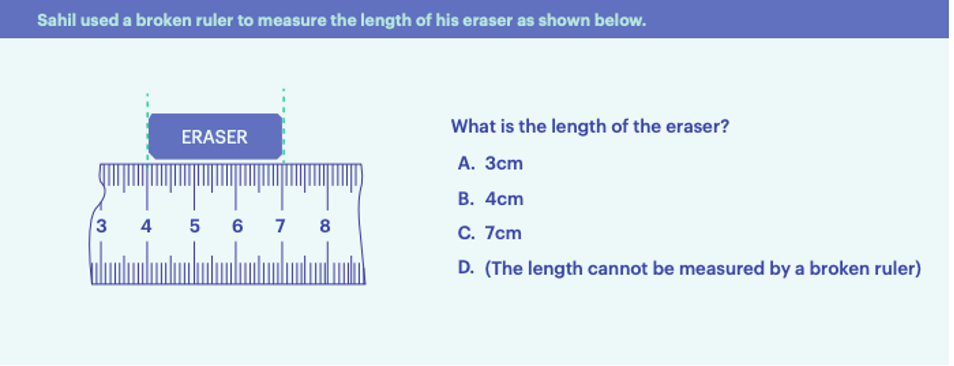

An MCQ has 3 parts: a prompt, the question, and (typically) 4 options from which to select the correct answer. In high-quality MCQs, the incorrect options (also known as distractors) point to common student misconceptions, so that the teacher can identify the gap in learning from the incorrect option a student selects.

Q1: What role do high-quality, competency-aligned questions play in large-scale assessments such as the recently conducted National Achievement Survey (NAS)?

Nishchal: The quality of questions and the way we ask them is at the heart of any assessment, whether a class test or a large-scale assessment such as NAS. Large-scale assessments have two uses. One is as a standard-setting tool for comparing results across districts, states, etc., also known as benchmarking; the other is diagnosing learning gaps to identify areas for building teacher capacity. Diagnosis depends on how well and how reliably the tool can surface gaps and insights. If the idea is to diagnose misconceptions, the questions have to be geared towards that. If the idea is to understand gaps in student learning, then that has to be kept at the centre. The amount of time any agency or State needs to dedicate to item building should not be underestimated.

Q2: What are the key features of competency-aligned MCQs? What’s the most challenging aspect of making these questions for grades 1-12?

Nishchal: Creating MCQs involves several aspects:

1. Competency (what is tested) and how to create an item that tests it: this includes identifying, from a topic, a few ideas that are worth assessing in 1-2 questions. This is the most effective way to test a particular concept and capture diagnostic data on the specific gaps students may have. You start with the objective, and then you draft the question.

For example, in the topic of Area and Perimeter, the chapter may talk about perimeter without naming it, define perimeter, give formulae for calculating it, etc. If I really want to check whether students understand perimeter, I may want to assess which kinds of shapes have a perimeter (2D, 3D, etc.).

2. Question making has a few rules, but beyond a point it becomes an art: the kind of real-life contexts used and how one frames the question are very important. A child responds differently to an unfamiliar question than to a familiar one. Again, the time required to create such questions is generally underestimated, and teachers feel they are not given enough time to create high-quality questions and distractors.

To come up with high-quality distractors (incorrect options), teachers rely either on their classroom experience or on something they have read or heard. However, we need repositories of misconceptions, which are currently not available in India. And such repositories can only be built through analysis of assessment data.

Here are some tools that can help teachers make good items:

- Good question bank with past data on student performance on those questions

- Repository of common misconceptions for every topic and grade

- Curated international question bank to refer to for ideas, with data on performance of those questions

- Bank of contexts/ case studies and stimuli that can be used by teachers to make questions

3. Each subject is different, and teachers come with subject matter expertise: Language MCQs generally test grammar, vocabulary, comprehension, etc. For language questions, there is a need to source high-quality, bias-free passages which are relevant, age-appropriate and interesting for students.

Mathematics questions focus on problem solving and students’ understanding of concepts. In maths, the errors that students make (procedural errors) are helpful to teachers while making items.

In science, one could test the understanding of topics, the integration of various topics, how to design an experiment, etc. Access to contexts and real-life applications of those concepts will help teachers.

Q3: EI works with many states and teachers to build capacity for high-quality assessments. What are the major wins and gaps in institutional capacity for making high-quality questions? How can these challenges be solved?

Nishchal: In a lot of the states we work with, there is a genuine intention to develop high-quality questions. Some states have good processes in place to filter and screen the item developers who enter this process. Other states have engaged the same set of people over a period of time instead of running workshops with a new group of experts every time. A few states have incorporated a certain percentage of higher-order questions in their existing tests.

One area where progress remains to be seen is the state’s systems for question creation — it could be in the form of a question-making portal where questions can be made, reviewed and finalised; and all comments are recorded. A certain time commitment (say 5 years) from the people who are involved in this process, and investment over that period from the state, are needed to build institutional capacity for making good questions.

Q4: What approach should be followed for building high-quality item banks? What has worked in the past (e.g., cascaded training, large webinars, picking the best people and building their capacity, item-writing competitions, etc.)?

Nishchal: First, we need to have a fixed set of people engage with item creation over a long period of time. This will also include working on specific sub-tasks within item creation like reviewing questions. Second, we need to ensure that people engage with workshops, creating questions, reviewing them, etc., with rigour and persistence.

Q5: The process of building better assessments should lead back to improvements in the classroom. Is that the process currently? How do we make that loop stronger?

Nishchal: We need to look at the granularity of the assessment data that is captured. If you’re only capturing overall scores, the only analysis you will be able to do is which school/district is performing well. To unleash the power of MCQs, you can capture data at the level of every student and every question to understand which child harbours what misconception. Every assessment designer, large-scale or classroom, should think about what they can tell the teacher at the end of the day about their classroom’s misconceptions and areas of improvement.
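To make question-level diagnosis concrete, here is a minimal Python sketch. Everything in it is hypothetical: the item ID `Q7`, its options, the misconception labels (loosely based on the perimeter example above) and the student responses are illustrative, and the function name `diagnose` is an invented helper rather than any tool EI or NAS uses. The only idea carried over from the interview is that each distractor is written to reveal one misconception, so recording every student's chosen option turns wrong answers into a misconception report.

```python
from collections import Counter, defaultdict

# Hypothetical answer key: each distractor is tagged with the misconception it targets.
ITEM_KEY = {
    "Q7": {
        "correct": "B",
        "distractors": {
            "A": "confuses perimeter with area",
            "C": "adds only two of the four sides",
            "D": "thinks 3D solids have a perimeter",
        },
    },
}

# Hypothetical response data: one row per student per question, not just total scores.
RESPONSES = [
    ("Asha", "Q7", "A"),
    ("Ravi", "Q7", "B"),
    ("Meena", "Q7", "A"),
    ("Kabir", "Q7", "D"),
]


def diagnose(responses, item_key):
    """Map each incorrect response to the misconception its distractor targets.

    Returns (per-student list of (question, misconception), class-level counts).
    """
    by_student = defaultdict(list)
    class_counts = Counter()
    for student, question, choice in responses:
        item = item_key[question]
        if choice == item["correct"]:
            continue  # correct answers carry no misconception signal here
        misconception = item["distractors"].get(choice, "unclassified error")
        by_student[student].append((question, misconception))
        class_counts[misconception] += 1
    return dict(by_student), class_counts


if __name__ == "__main__":
    students, counts = diagnose(RESPONSES, ITEM_KEY)
    print(students)              # which child harbours which misconception
    print(counts.most_common())  # the classroom's most common misconceptions
```

With only total scores, Asha, Meena and Kabir would look identical. With option-level data, the teacher can see that two of them confuse perimeter with area while Kabir needs help with a different idea.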

Q6: What is your one hope and one worry with regards to item development?

Nishchal: Hope: With NEP 2020, we’re able to make that shift in assessments at all levels – district to nation. Worry: Question creation process might get underestimated. If we don’t focus on building the

Authored by

Aditi Nangia

Senior Project Lead, CSF
